Control Windows with Your Voice and the Magic of ChatGPT

Voice -> OpenAI Whisper -> ChatGPT -> AutoHotKey Script = Windows Automation

Typing is the most common way we interact with our computers, but we can talk much faster. Even with practiced fingers, we barely type 40 words per minute, yet we effortlessly speak at around 150 words per minute, nearly four times as fast.

However, using our voice to instruct our computers long faced two major hurdles. Firstly, computers used to be quite bad at transcribing speech into text. Take Dragon NaturalSpeaking 2006, for instance: it transcribed speech with only about 80% accuracy, and I found correcting its small errors more time-consuming than simply typing the text myself.

Secondly, the precision required by computers clashed with our naturally imprecise spoken language.

Fortunately, thanks to recent technological advances, these issues have largely disappeared as of late last year. The remarkable OpenAI Whisper model excels at speech-to-text transcription; it even copes with my German accent! Meanwhile, OpenAI's GPT-4 model can understand natural language and translate it into a form a computer can act on, as its remarkable ability to write code demonstrates.

With these two innovations, OpenAI Whisper and GPT-4, I wondered whether I could now control my computer, specifically my personal Windows computer, with my voice.

In the rest of this article, I'll explain how I combined the OpenAI API, AutoHotKey and a simple Go program to control Windows with my voice.

The ready-to-use tool and all source code are on GitHub.

TL;DR

If you want to see what I developed in action, please just have a look at the YouTube video below.

My tool can trigger many actions, including, but not limited to, the following:

Launching a programme with the command 'open...'

Hunting for information online with the instruction 'search for...'

Accessing Wikipedia via 'Open Wikipedia page on...'

Engaging with ChatGPT using 'tell me...'

Scouting for images with the command 'search for images of...'

How can I use it?

Simply download the latest release from:

https://github.com/mxro/autohotkey-chatgpt-voice/releases

Instructions for installation can be found here

How does it all work?

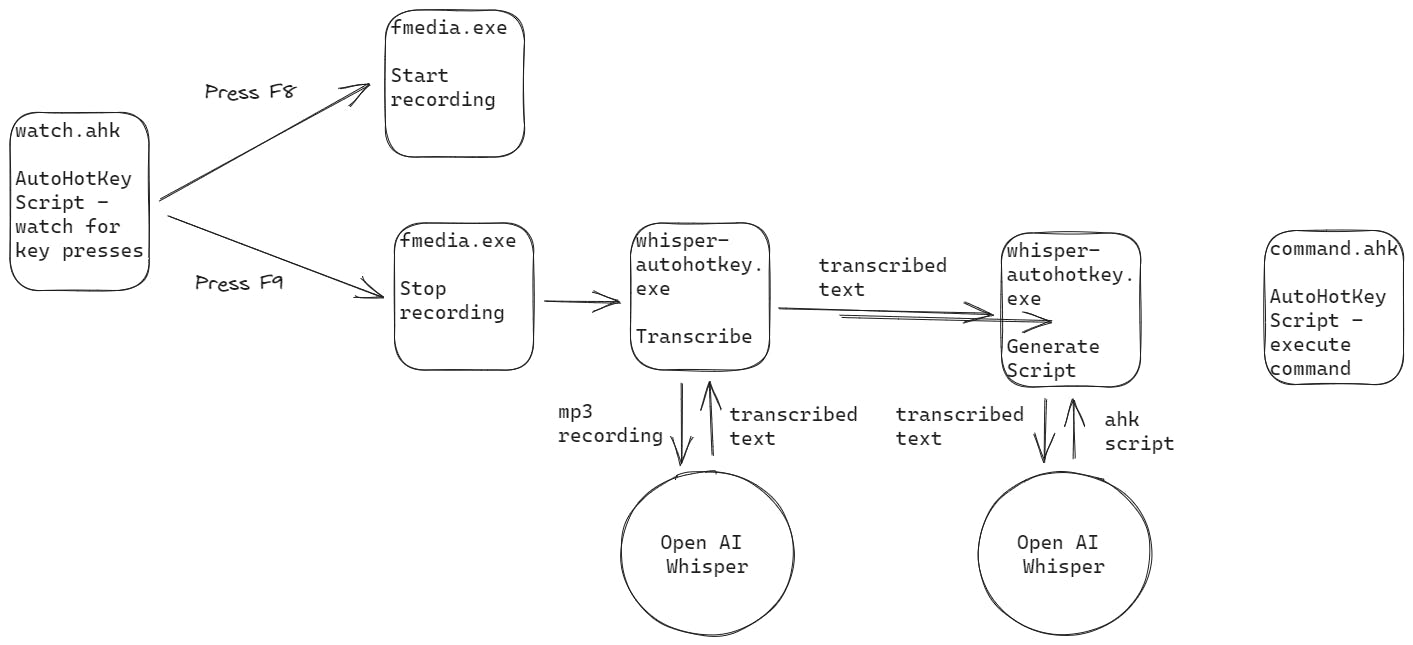

The following diagram shows the different steps this solution executes:

Three components are at the heart of this solution:

AutoHotKey, an open-source program for creating Windows automations.

fmedia, a tool for recording and manipulating audio.

whisper-autohotkey.exe, a bespoke application I wrote in Go to communicate with the OpenAI API.

This is how they work together:

Step One: Launch an AutoHotKey script that waits in the background for the F8 key to be pressed.

Step Two: When F8 is pressed, start recording audio with fmedia.

Step Three: When F8 is pressed again, tell fmedia to stop recording.

Step Four: Run the custom Go application, whisper-autohotkey.exe, which sends the audio file recorded by fmedia to OpenAI's Whisper API to be transcribed to text.

Step Five: Send the transcribed text to the OpenAI chat completion API (GPT-4), which generates an AutoHotKey script.

Step Six: Run the AutoHotKey executable on the generated script, which performs the requested action.

The following sections explain each component and step in more detail.

AutoHotKey Watch Script

The watch script defines an action that is triggered whenever the user presses the F8 key.

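```autohotkey
; Toggle recording each time F8 is pressed.
F8::
; NotRecording starts out empty (false), so the first press sets it to true
; and starts a recording; the next press sets it back to false and stops it.
NotRecording := !NotRecording
If NotRecording
{
    ; Record to rec.mp3; --globcmd=listen lets a later fmedia call stop it.
    Run %A_WorkingDir%\bin\fmedia-1.31-windows-x64\fmedia\fmedia.exe --record --overwrite --mpeg-quality=16 --rate=12000 --out=rec.mp3 --globcmd=listen,, Hide
}
Else
{
    ; Stop the recording, give fmedia a moment to finish writing rec.mp3,
    ; then hand over to the Go application.
    Run %A_WorkingDir%\bin\fmedia-1.31-windows-x64\fmedia\fmedia.exe --globcmd=stop,, Hide
    Sleep, 100
    Run %A_WorkingDir%\bin\whisper-autohotkey\whisper-autohotkey.exe,, Hide
}
return
```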

We first check whether we are currently recording. If we are not, we use fmedia to start recording with the following command:

```autohotkey
Run %A_WorkingDir%\bin\fmedia-1.31-windows-x64\fmedia\fmedia.exe --record --overwrite --mpeg-quality=16 --rate=12000 --out=rec.mp3 --globcmd=listen,, Hide
```

Note in particular the --globcmd=listen option. It allows us to stop this recording through another invocation of fmedia.exe.

Also note the specific quality and sample rate settings (--mpeg-quality=16 and --rate=12000); I tuned these through a few experiments.

When F8 is pressed and we are currently recording, we use fmedia.exe to send the command to stop recording:

```autohotkey
Run %A_WorkingDir%\bin\fmedia-1.31-windows-x64\fmedia\fmedia.exe --globcmd=stop,, Hide
```

We then pause for 100 milliseconds to give fmedia time to finish writing the recorded file, and then run the custom executable whisper-autohotkey.exe:

```autohotkey
Sleep, 100
Run %A_WorkingDir%\bin\whisper-autohotkey\whisper-autohotkey.exe,, Hide
```

The next steps all happen in whisper-autohotkey.exe - a custom Go application I developed.

Transcribe

Within our Go application, we send the recorded MP3 file to the OpenAI Whisper API (see whisper.go):

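```go
import (
	"context"

	openai "github.com/sashabaranov/go-openai"
)

// Transcribe uploads the recorded audio file to the OpenAI Whisper API and
// returns the transcribed text. Config is defined elsewhere in the project.
func Transcribe(inputFileName string, config Config) (string, error) {
	c := openai.NewClient(config.OpenapiKey)
	ctx := context.Background()
	req := openai.AudioRequest{
		Model:    openai.Whisper1,
		Prompt:   "", // optional text to guide the transcription; unused here
		FilePath: inputFileName,
	}
	response, err := c.CreateTranscription(ctx, req)
	if err != nil {
		return "", err
	}
	return response.Text, nil
}
```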

We are using the go-openai library (github.com/sashabaranov/go-openai), a Go SDK for the OpenAI API, here.

Generate Script

Once we have received the transcription, we call the OpenAI chat completion endpoint to generate our AutoHotKey script (see gpt4.go):

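```go
import (
	"context"
	"errors"
	"math"
	"os"
	"strings"

	openai "github.com/sashabaranov/go-openai"
)

// BuildCommand asks GPT-4 to turn the transcribed voice command (prompt)
// into an AutoHotKey script, using prompt.txt as the system message.
func BuildCommand(config Config, prompt string) (string, error) {
	// Empty transcription: show a hint instead of calling the API.
	if strings.TrimSpace(prompt) == "" {
		return "MsgBox, 32,, No input detected! Is your microphone working correctly?", nil
	}
	systemContext, err := os.ReadFile("./prompt.txt")
	if err != nil {
		return "", err
	}
	c := openai.NewClient(config.OpenapiKey)
	response, err := c.CreateChatCompletion(
		context.Background(),
		openai.ChatCompletionRequest{
			Model: openai.GPT4,
			// A Temperature of 0 would be dropped from the request (omitempty),
			// so use the smallest non-zero value instead; see
			// https://github.com/sashabaranov/go-openai#why-dont-we-get-the-same-answer-when-specifying-a-temperature-field-of-0-and-asking-the-same-question
			Temperature: math.SmallestNonzeroFloat32,
			Messages: []openai.ChatCompletionMessage{
				{Role: openai.ChatMessageRoleSystem, Content: string(systemContext)},
				{Role: openai.ChatMessageRoleUser, Content: "ACTION: " + prompt},
			},
		},
	)
	if err != nil {
		return "", err
	}
	// Guard against an empty response instead of panicking on Choices[0].
	if len(response.Choices) == 0 {
		return "", errors.New("chat completion returned no choices")
	}
	return response.Choices[0].Message.Content, nil
}
```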

Most of the guidance for the GPT model is provided in the system message, which is read from the following custom prompt (prompt.txt):

```text
You are a Windows automation engineer that is very familiar with AutoHotKey. You create AutoHotKey V1 scripts. I ask you to conduct a certain ACTION. You then write a script to perform this action.
Unless otherwise specified, assume:
the default browser is Firefox
the default search engine is DuckDuckGo
if looking for pictures, open the pexels website
when I ask you to 'tell me X', output a script that shows a GUI window using MsgBox that provides the answer to X.
if no specific action is specified, assume a web search for the prompt needs to be conducted
Your answer must ALWAYS ONLY be a correct AutoHotKey Script, nothing else
Avoid all logical and syntactical errors. To help you avoid making errors, ALWAYS keep in mind ALL of the following rules:
The action should be executed when the AHK script is run, not define a keyboard shortcut to trigger the action.
You only respond with the script, don't include any comments, keep it as short as possible but ensure there are no syntax errors in the script and it is a correct AutoHotKey V1 script.
Tray tips are provided as follows 'TrayTip , Title, Text, Timeout, Options'.
When constructing URLs, ensure to escape the escape sequence for space (%20) as '`%20'.
Apply all AutoHotKey Escape sequences as required.
Replace all '%' characters in URLs with the correct escape sequence '`%'. E.g. '%20' with '`%20'.
NEVER provide any other output than the script. Always complete the action with a 'return'.
If you are not sure what action needs to be taken or how to create a script to perform the action, create a script with the following content:
MsgBox, 32,,[Your comment]
Replace [Your comment] with your comment. Also include the prompt as you have received it in the comment.
- If I ask you to Paste something, use the SendInput, {Raw} function.
Now I will provide the ACTION. Please remember, NEVER respond with ANYTHING ELSE but a valid AutoHotKeyScript.
```

You can easily customise this prompt after downloading the tool by editing the prompt.txt file in the tool folder, for instance to change the default browser to Chrome.
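The prompt insists that the answer be only a script, but language models sometimes still wrap code in a Markdown code fence. Whether this happens with this prompt isn't stated here; if it does, a small clean-up step between BuildCommand and RunCommand would keep the fence lines out of script.ahk. A minimal sketch, where stripCodeFences is a hypothetical helper and not part of the published tool:

```go
package main

import (
	"fmt"
	"strings"
)

const fence = "```"

// stripCodeFences removes a Markdown code fence wrapped around a model
// response (including a language tag on the opening line) and returns the
// bare script. A response without a fence is only trimmed of whitespace.
func stripCodeFences(s string) string {
	s = strings.TrimSpace(s)
	if !strings.HasPrefix(s, fence) {
		return s
	}
	// Drop the opening fence line, e.g. one carrying an "autohotkey" tag.
	newline := strings.Index(s, "\n")
	if newline < 0 {
		return ""
	}
	s = strings.TrimSpace(s[newline+1:])
	s = strings.TrimSuffix(s, fence)
	return strings.TrimSpace(s)
}

func main() {
	wrapped := fence + "autohotkey\nRun, notepad.exe\nreturn\n" + fence
	fmt.Println(stripCodeFences(wrapped))
}
```

Only a leading and a trailing fence are removed, so AutoHotKey's own backtick escapes inside the script are left untouched.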

Execute Script

After we have generated the script, we execute it with AutoHotKey (see ahk.go):

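```go
import (
	"os"
	"os/exec"
)

// RunCommand writes the generated script to script.ahk and executes it with
// AutoHotKey, returning anything the script writes to standard output.
func RunCommand(config Config, script string) (string, error) {
	if err := os.WriteFile("script.ahk", []byte(script), 0666); err != nil {
		return "", err
	}
	// Fall back to the AutoHotKey build bundled with the tool.
	autoHotKeyPath := config.AutoHotKeyExec
	if autoHotKeyPath == "" {
		autoHotKeyPath = ".\\bin\\autohotkey-1.1.37.01\\AutoHotkeyU64.exe"
	}
	// Output blocks until the script exits.
	data, err := exec.Command(autoHotKeyPath, "script.ahk").Output()
	if err != nil {
		return "", err
	}
	return string(data), nil
}
```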

The generated script will, for example, look like this:

```autohotkey
Run, firefox.exe "https://duckduckgo.com/?q=Whisper`%20OpenAI`%20API`%20Go`%20SDK"
return
```

Learnings and Limitations

The following are my learnings and the remaining limitations of the tool:

The OpenAI Whisper API can be temperamental, mainly because of the time it takes to upload the audio and then process it.

Latency varies significantly: requests sometimes take up to ten times longer than average. This happens regularly with my basic, consumer-grade account.

GPT-4 is not especially good at writing AutoHotKey scripts and often falls into basic logic or syntax traps. I tried to avoid some of the most frequent mistakes by giving the model extra guidance in the system prompt. However, it still struggled with character escaping, a common challenge in AutoHotKey. For example, the character '%' has to be escaped as '`%'.

Conclusion

Given that Cortana has been discontinued, it seems safe to say that its ability to control Windows by voice wasn't a hit.

By contrast, the tool described in this post has been quite useful to me, particularly for triggering web searches and for bundling multiple steps into a single, straightforward voice command, which saves me from typing out search queries.

The main disadvantage I've noticed in my personal use is the Whisper API's sluggishness: there is a noticeable delay between issuing a command and the script finally executing, while the audio is transcribed and passed to GPT. However, I was able to reduce latency by about 50% by fine-tuning the audio encoding settings.

Further, GPT-4 doesn't always produce correct AutoHotKey scripts; in my experience, about 90% of them work. However, I'm optimistic that further refining the prompt can improve this success rate.

This tool has been published as an open-source project. I encourage you to share your observations or thoughts, either on the project page or in a comment on this post.